Maybe it was an internet rabbit hole, where at 3am on a Tuesday morning you stumbled on a video of an M&M serenading you with a Japanese ballad. Maybe it was Kim Jong-Un singing “Witch Doctor”. Or maybe, if you are actively involved in Dunedin News (i.e. over 50), you saw Mayor Aaron Hawkins performing “Never Gonna Give You Up” or “I Will Survive”.

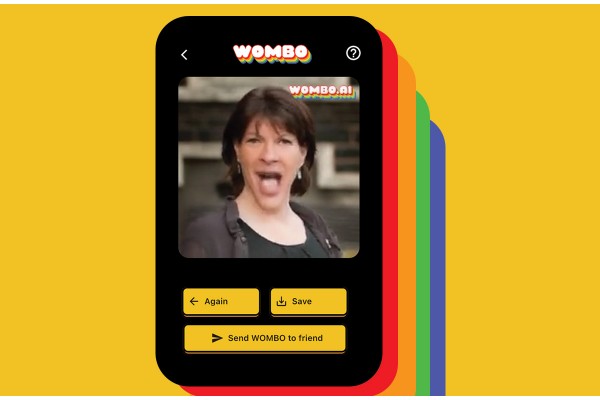

Animated in an exaggerated and somewhat terrifying style using an app called Wombo.ai, the videos are pretty easy to dismiss as a stupid joke. Similar technologies, though, are ripe for abuse, whether by mining huge databases of uploaded photos for data or by making videos of people saying things they never said (deepfakes).

At first glance, Wombo.ai appears perfectly harmless. Everything about it seems designed for maximum viral value, from the unapologetically silly animation to the choice of songs (including such bangers as “I’m Blue”, “Everytime We Touch”, and “YMCA”).

Even the origin story seems to fit this narrative. Other tech companies talk a big game about inspiring, world-changing visions, but Wombo’s founder Ben-Zion Benkhin told The Verge that he “had the idea for Wombo while smoking a joint with my roommate”.

The way Wombo works seems simple: the basic motions (the eye flicks, the mouth movements and cheeky grins) are essentially photoshopped onto your picture, with Wombo’s software determining where your facial features are and what editing needs to be done to make it fit.

With similar apps in the past, users have raised concerns about photo privacy. Most famously, FaceApp (the one which made you look older) was found to be uploading and storing the photos users had posted. Under its Terms of Service, it owned every photo edited with the app.

Ben-Zion insists that Wombo takes users’ privacy seriously. Unlike most apps, you don’t need to register with names or emails, and the photos are deleted immediately after your video is created. Wombo makes it clear they don’t own any photos or videos made using their app.

What concerns some experts is what happens after the video is created — when it’s shared to the rest of the world.

In an interview with Radio One, law professor Colin Gavaghan said that Wombo’s videos, while unsettling, were “not hyper-realistic”, and that not many people would be fooled into thinking they were real. Convincing-looking videos, such as those from the @deeptomcruise account on TikTok, still need heaps of work to refine the details that stick out to even casual viewers. For example, there is glitching when an object moves across a faked mouth. However, with this technology improving extremely quickly, he said “it’s only a matter of time before we’re in a position where a casual observer just can’t tell the difference.”

Stephen Davis, a long-time investigative journalist, expressed concerns that platforms like Wombo could add to the spread of fake news online. “The danger of so many things done online as a joke is that they have the enormous potential to be misused by people who are not making jokes … 10,000 shares down the line, someone is taking it seriously.” He thought there was a risk that, with advances in this technology, a future Putin or Trump “won’t just have to lie in public, they’ll be able to produce the most authentic looking videos which perpetuate their lies”.

Though many of the fears around deepfakes have focused on their political implications, more immediate threats loom. Sensity AI, a research company, has consistently found 90-95% of deepfake content to be non-consensual porn, the vast majority depicting women. BBC News reported in March that a woman generated deepfake images of her teenage daughter’s cheerleading rivals “naked, drinking and smoking” using photos from their social media accounts.

While Wombo itself, birthed in a cloud of cannabis smoke in a Toronto apartment, seems pretty harmless, the broader rise of deepfake technology has some terrifying implications. Deepfakes make it easier than ever to make it look like someone said or did something they never did.

“Take your time and think carefully about something before you share,” said Colin. His view was shared by Stephen, who warned: “Your share could be multiplied by a million times, and somewhere in that million people, there will be a number of people who won’t get the joke.”

Listen to Zac Hoffman’s interview at R1 Podcasts.